Publications


Cooperative Localization of UAVs in Multi-Robot Systems Using Deep Learning-Based Detection

Abstract

The integration of multiple Uncrewed Aerial Vehicles (UAVs) across diverse domains, including agriculture, disaster management, and environmental monitoring, has demonstrated immense potential due to their operational flexibility and advanced maneuverability. However, achieving precise localization remains a significant challenge, particularly when these vehicles operate in close proximity. Standard Global Navigation Satellite System (GNSS) sensors typically provide a positional accuracy of approximately 2.5 meters, and environments with GNSS disruptions exacerbate this challenge. This paper introduces a novel cooperative localization framework designed to enhance localization accuracy in multi-robot systems comprising UAVs and Unmanned Ground Vehicles (UGVs). The proposed method leverages deep learning-based detection, specifically the YOLOv8 convolutional neural network, to enable real-time object detection and localization. By integrating perception with Kalman Filtering (KF), the approach achieves improved localization accuracy, even in challenging environments, thus advancing the state of the art in cooperative multi-robot systems.
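As a rough illustration of why fusing measurements in a Kalman filter can tighten a position estimate, the sketch below runs a scalar (one-axis) filter that applies a GNSS fix with σ ≈ 2.5 m (the figure quoted above) followed by a detection-derived position fix. The random-walk motion model, the process noise `q`, the 0.5 m accuracy assumed for the detection-based fix, and the sample measurements are all hypothetical; this is not the paper's filter design.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """One-axis Kalman filter with a random-walk (constant-position) model."""
    x: float          # position estimate (m)
    p: float          # estimate variance (m^2)
    q: float = 0.1    # process noise variance per step (assumed)

    def predict(self) -> None:
        # Random-walk model: position unchanged, uncertainty grows by q.
        self.p += self.q

    def update(self, z: float, r: float) -> None:
        # Standard scalar Kalman update with measurement z of variance r.
        k = self.p / (self.p + r)   # Kalman gain
        self.x += k * (z - self.x)
        self.p *= (1.0 - k)


GNSS_VAR = 2.5 ** 2     # ~2.5 m standard deviation, as quoted in the abstract
VISION_VAR = 0.5 ** 2   # hypothetical accuracy of a detection-based fix

kf = ScalarKalman(x=0.0, p=100.0)   # vague initial estimate
for gnss_z, vision_z in [(10.8, 10.1), (9.3, 10.0), (11.9, 10.2)]:
    kf.predict()
    kf.update(gnss_z, GNSS_VAR)      # coarse GNSS fix
    kf.update(vision_z, VISION_VAR)  # finer, detection-derived fix

print(f"position = {kf.x:.2f} m, std = {kf.p ** 0.5:.2f} m")
```

Because each update shrinks the variance, the fused standard deviation ends up below that of either measurement source alone.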

Enhancing Fixed-Wing UAS Autonomy: A ROS2 Package for XPlane

Abstract

This paper presents the development and deployment of a ROS2 package tailored for seamless integration with the XPlane flight simulator. It discusses the motivations behind its creation, emphasizing the need for a robust framework to facilitate interaction between ROS2-based robotic systems and the XPlane environment. The package's architecture, components, and functionality are described in detail, showcasing its capabilities for accessing vehicle state data and controlling the aircraft. Detailed installation instructions are provided to ensure a smooth setup process, accompanied by practical demonstrations of autonomous operations within the XPlane simulator environment. Overall, this paper serves as a comprehensive resource for researchers and developers seeking to use ROS2 to interface with the XPlane simulator, enabling diverse applications in robotics and aerospace.

Adaptive Perception Control for Aerial Robots with Twin Delayed DDPG

Abstract

Robotic perception is commonly assisted by convolutional neural networks (CNNs). However, these networks are static in nature and do not adapt to changes in the environment. They are also computationally complex and add latency to inference. We propose an adaptive perception system that changes in response to the robot's requirements. The perception controller was designed using Twin Delayed DDPG (TD3), a recently proposed reinforcement learning technique that extends deep deterministic policy gradient (DDPG). Our proposed method outperformed the baseline approaches.
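TD3 differs from DDPG mainly through three techniques: clipped double-Q learning, target policy smoothing, and delayed policy updates. As a sketch of the first two only (not the paper's perception controller), the function below computes the TD3 critic target for scalar states and actions. The toy actor and critics are hypothetical stand-ins for target networks, and the default noise and discount values are common choices rather than values from the paper.

```python
import random
from typing import Callable, Optional

QFn = Callable[[float, float], float]  # (state, action) -> value


def td3_target(
    reward: float,
    next_state: float,
    done: bool,
    actor_target: Callable[[float], float],
    critic1_target: QFn,
    critic2_target: QFn,
    gamma: float = 0.99,
    noise_std: float = 0.2,
    noise_clip: float = 0.5,
    action_low: float = -1.0,
    action_high: float = 1.0,
    rng: Optional[random.Random] = None,
) -> float:
    """Bellman target used to train both TD3 critics."""
    rng = rng or random.Random()
    # Target policy smoothing: add clipped Gaussian noise to the target action.
    noise = max(-noise_clip, min(noise_clip, rng.gauss(0.0, noise_std)))
    next_action = actor_target(next_state) + noise
    next_action = max(action_low, min(action_high, next_action))
    # Clipped double-Q: use the smaller of the two target critics.
    q_next = min(critic1_target(next_state, next_action),
                 critic2_target(next_state, next_action))
    return reward + gamma * (0.0 if done else q_next)


# Hypothetical toy "target networks" for illustration.
def actor(s: float) -> float:
    return 0.5 * s


def q1(s: float, a: float) -> float:
    return s + a


def q2(s: float, a: float) -> float:
    return s + 2.0 * a


y = td3_target(1.0, 1.0, False, actor, q1, q2, rng=random.Random(0))
```

The "delayed" part of TD3, updating the actor and target networks less often than the critics, lives in the training loop and is not shown.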

A Closed Loop Perception Subsystem for small Unmanned Aerial Systems

Abstract

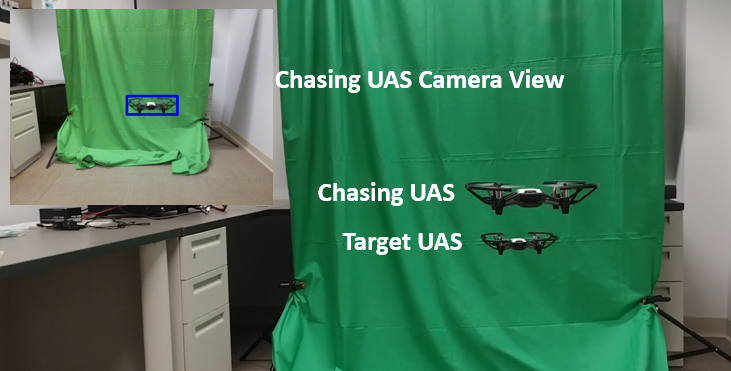

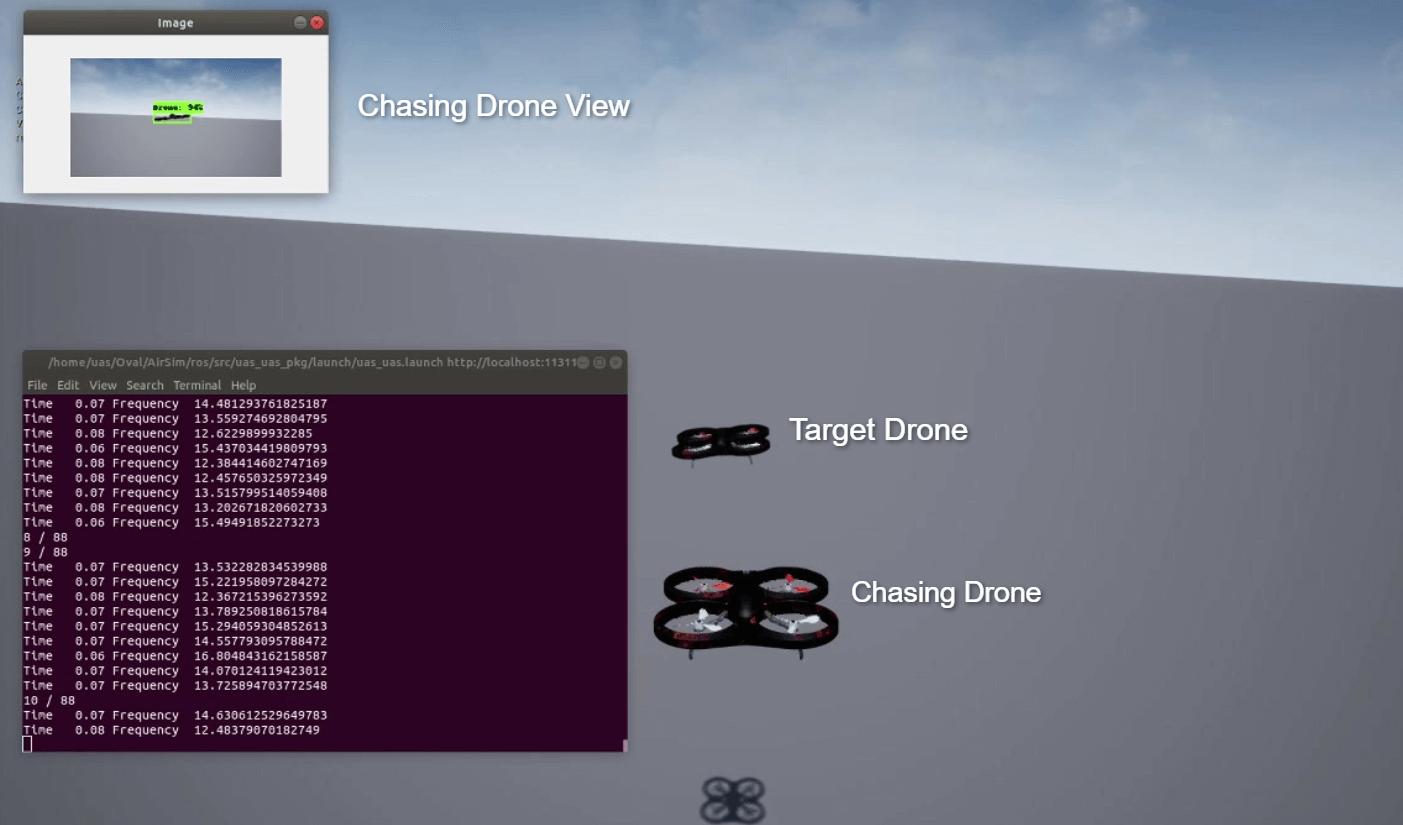

The perception subsystem in modern autonomous systems uses convolutional neural networks (CNNs) for their high accuracy. The structure of such networks is static, and there is a trade-off between their accuracy and their resource consumption, such as inference speed and energy use. This poses a challenge when designing the perception subsystem for agile autonomous systems such as small unmanned aerial systems (UAS), which have limited resources while operating in dynamic real-world scenarios. Existing approaches design the perception subsystem for the worst-case scenario, which can lead to inefficient resource utilization that hampers the mission capabilities of such systems. At the same time, it is difficult to predict the worst-case condition at design time, especially for systems that operate in stochastic and dynamic real-world scenarios. Therefore, we have developed a closed-loop perception subsystem that can dynamically change the structure of its CNN so that its accuracy and latency adapt to the requirements of a given scenario. The proposed system was tested in a UAS-to-UAS tracking scenario. The chasing UAS with the proposed closed-loop perception subsystem tracked the target UAS more accurately than the baseline approach with static CNNs while consuming less energy. Furthermore, the adaptive latency of the proposed method lets the chasing UAS track the target UAS at higher velocities than the baseline methods.
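The abstract does not specify how the subsystem chooses a CNN structure. Purely as a hypothetical illustration of the closed-loop idea, the sketch below picks, from a set of CNN variants with measured latency and accuracy, the most accurate variant that fits a latency budget derived from the target's relative speed. The variant names and numbers, the `latency_budget` rule, and the fallback policy are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CnnVariant:
    name: str
    latency_s: float   # measured inference latency (hypothetical values below)
    accuracy: float    # e.g. detection accuracy on a validation set


def select_variant(variants: list[CnnVariant], budget_s: float) -> CnnVariant:
    """Pick the most accurate variant whose latency fits the budget;
    fall back to the fastest variant if none fits."""
    feasible = [v for v in variants if v.latency_s <= budget_s]
    if not feasible:
        return min(variants, key=lambda v: v.latency_s)
    return max(feasible, key=lambda v: v.accuracy)


def latency_budget(tolerance_m: float, relative_speed_mps: float) -> float:
    """Hypothetical rule: the target may move at most `tolerance_m`
    between consecutive detections."""
    return tolerance_m / max(relative_speed_mps, 1e-6)


VARIANTS = [
    CnnVariant("small", 0.02, 0.60),
    CnnVariant("medium", 0.05, 0.70),
    CnnVariant("large", 0.12, 0.78),
]

# A slow target leaves room for the large network; a fast one forces a smaller one.
slow = select_variant(VARIANTS, latency_budget(0.5, 2.0))
fast = select_variant(VARIANTS, latency_budget(0.5, 8.0))
```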

Autonomous UAV Landing on a Moving UAV using Machine Learning

Abstract

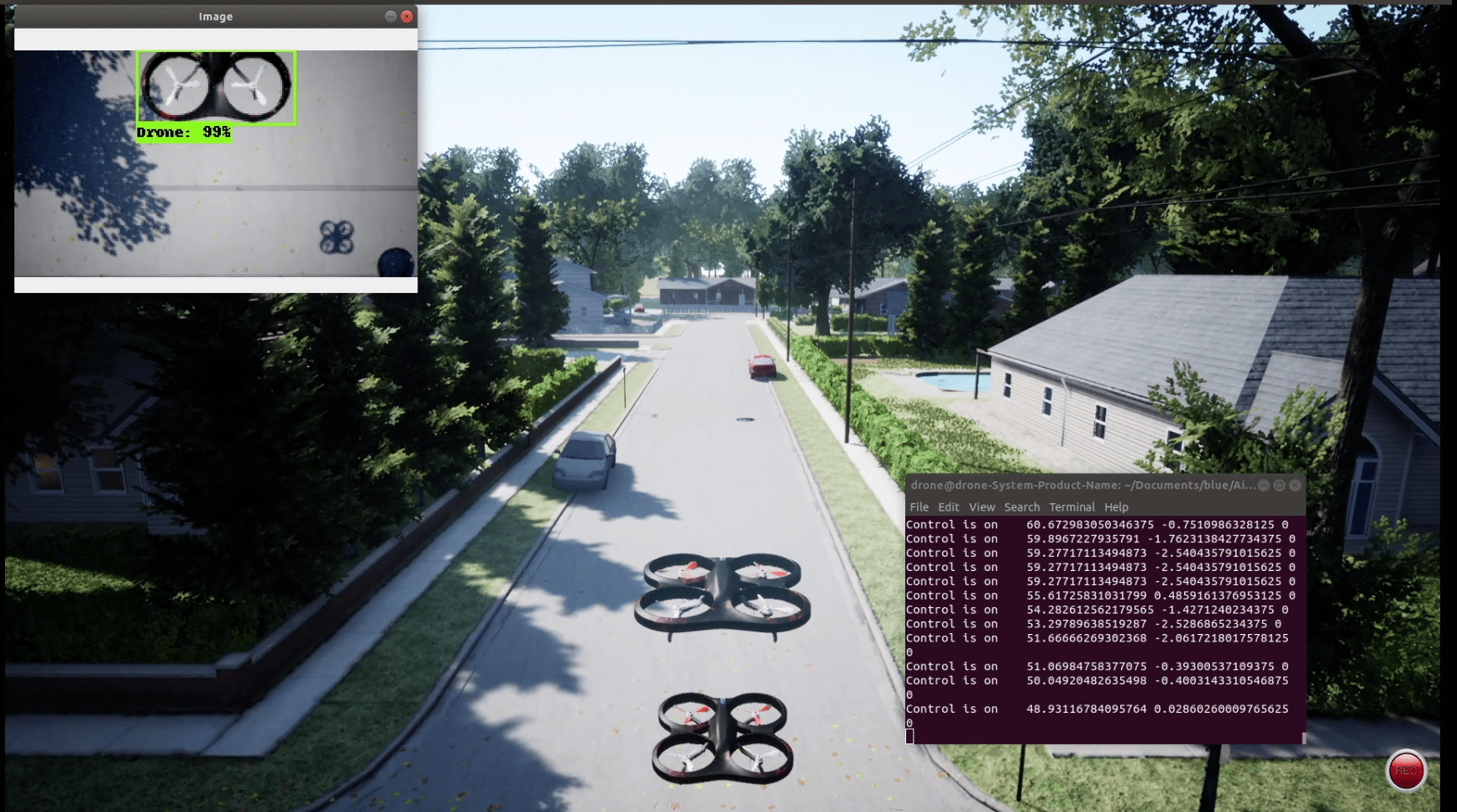

Autonomous landing of one UAV (the agent UAV) on another UAV (the base UAV) is critical to extending UAV manipulation but has not been well studied. The objective of this research was to examine the possibility of autonomously landing a UAV on another UAV. The whole landing process was conducted in a simulated environment (AirSim). A Single Shot MultiBox Detector (SSD) network was trained on a customized dataset and embedded into the agent UAV to recognize the base UAV, and the landing was controlled by a proportional-integral-derivative (PID) controller with the aid of the Robot Operating System (ROS). The base UAV was operated at velocities of 1, 2, 3, and 5 km/h along straight-line, square, and ellipse trajectories. The results showed that the SSD network detected and localized the base UAV with over 99% accuracy. The landing was successfully achieved at all four velocities. Such results demonstrate great potential for autonomous landing on moving platforms in future aerial robot applications.
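For readers unfamiliar with the control side, the sketch below shows a textbook discrete PID controller driving a toy one-dimensional horizontal offset to zero. It is not the paper's controller: the gains, time step, initial offset, and the simple "velocity command integrates into position" plant are assumptions, and the base UAV is held still for simplicity.

```python
from typing import Optional


class PID:
    """Discrete PID controller: u = Kp*e + Ki*sum(e*dt) + Kd*de/dt."""

    def __init__(self, kp: float, ki: float, kd: float, dt: float) -> None:
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error: Optional[float] = None

    def step(self, error: float) -> float:
        self.integral += error * self.dt
        # Skip the derivative on the first call to avoid a "derivative kick".
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Toy simulation (hypothetical): the agent's horizontal offset from the
# detected base UAV is driven to zero by a velocity command u.
DT = 0.05
pid = PID(kp=2.0, ki=1.0, kd=0.1, dt=DT)
offset = 1.0  # metres, assumed initial offset
for _ in range(400):
    u = pid.step(0.0 - offset)  # error = setpoint (0) - measured offset
    offset += u * DT            # velocity command integrates into position

print(f"final offset: {offset:.4f} m")
```

In a real landing pipeline, the measured offset would come from the detector's bounding box rather than a simulated state.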

Measuring the Perceived Three-Dimensional Location of Virtual Objects in Optical See-Through Augmented Reality

Abstract

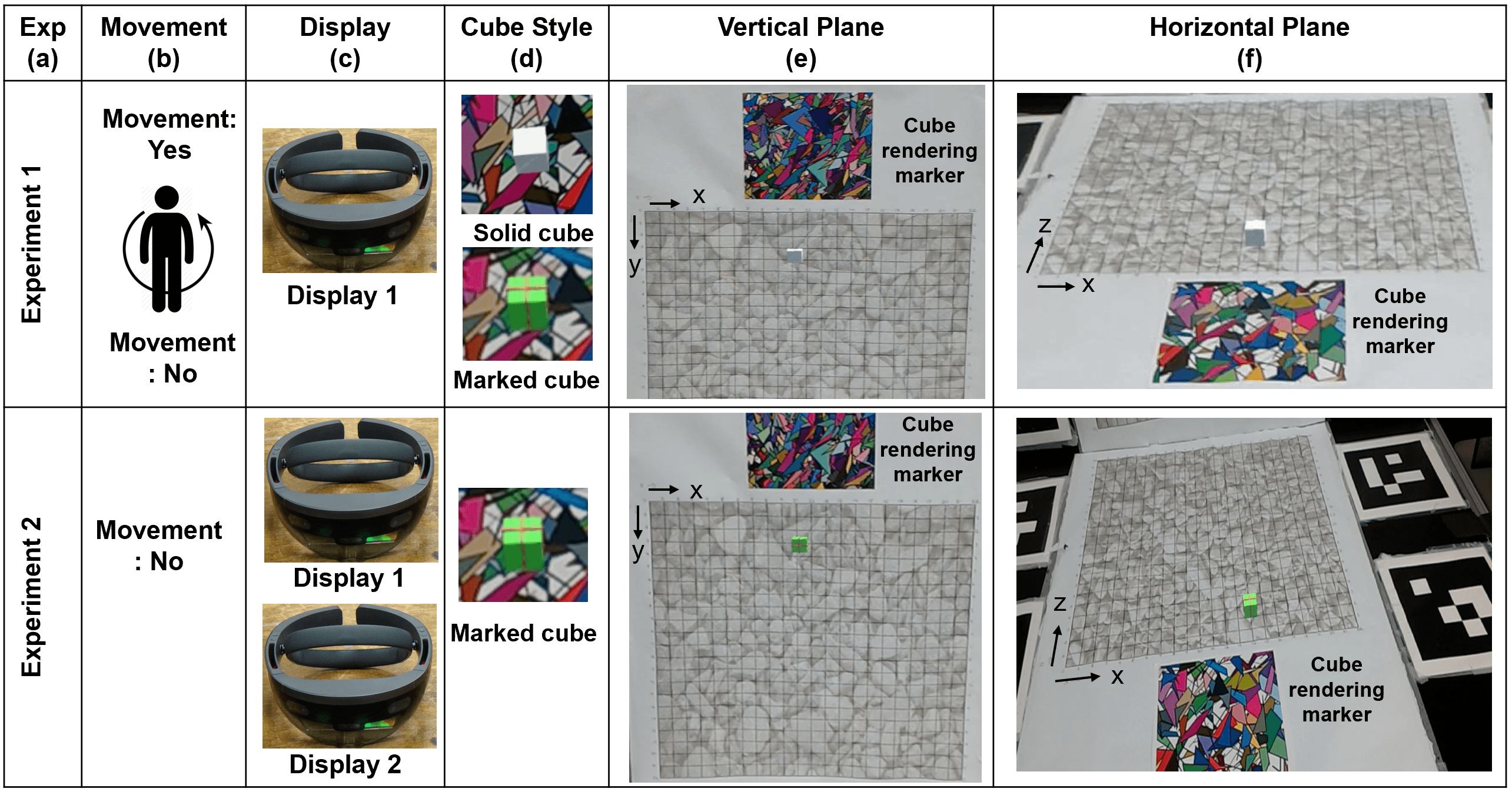

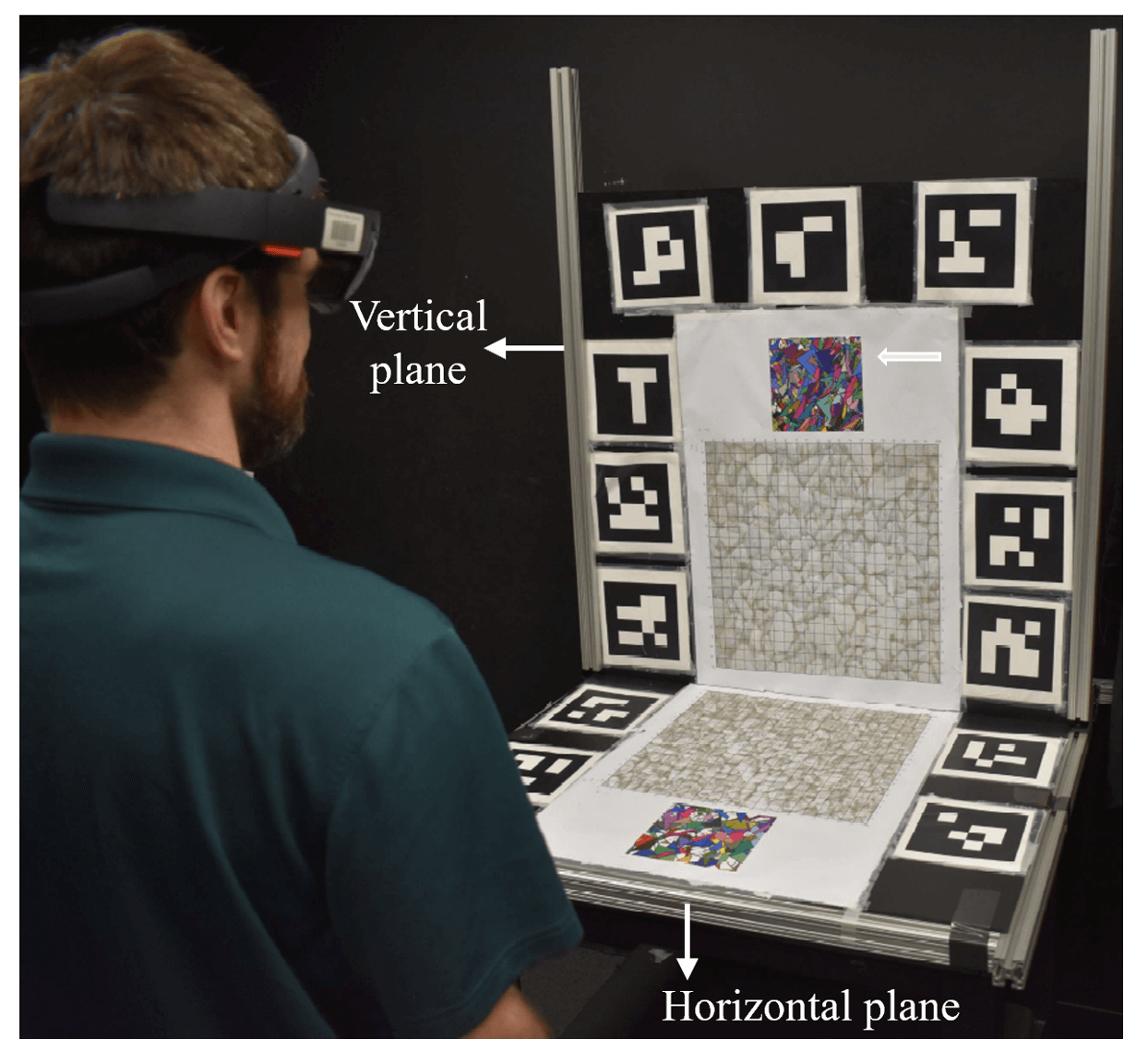

For optical see-through augmented reality (AR), a new method for measuring the perceived three-dimensional location of virtual objects is presented, where participants verbally report a virtual object location relative to both a vertical and horizontal grid. The method is tested with a small (1.95 × 1.95 × 1.95 cm) virtual object at distances of 50 to 80 cm, viewed through a Microsoft HoloLens 1st generation AR display. Two experiments examine two different virtual object designs, whether turning in a circle between reported object locations disrupts HoloLens tracking, and whether accuracy errors, including a rightward bias and underestimated depth, might be due to systematic errors that are restricted to a particular display. Turning in a circle did not disrupt HoloLens tracking, and testing with a second display did not suggest systematic errors restricted to a particular display. Instead, the experiments are consistent with the hypothesis that, when looking downwards at a horizontal plane, HoloLens 1st generation displays exhibit a systematic rightward perceptual bias. Precision analysis suggests that the method could measure the perceived location of a virtual object within an accuracy of less than 1 mm.

On the Effectiveness of Quantization and Pruning on the Performance of FPGAs-based NN Temperature Estimation

Abstract

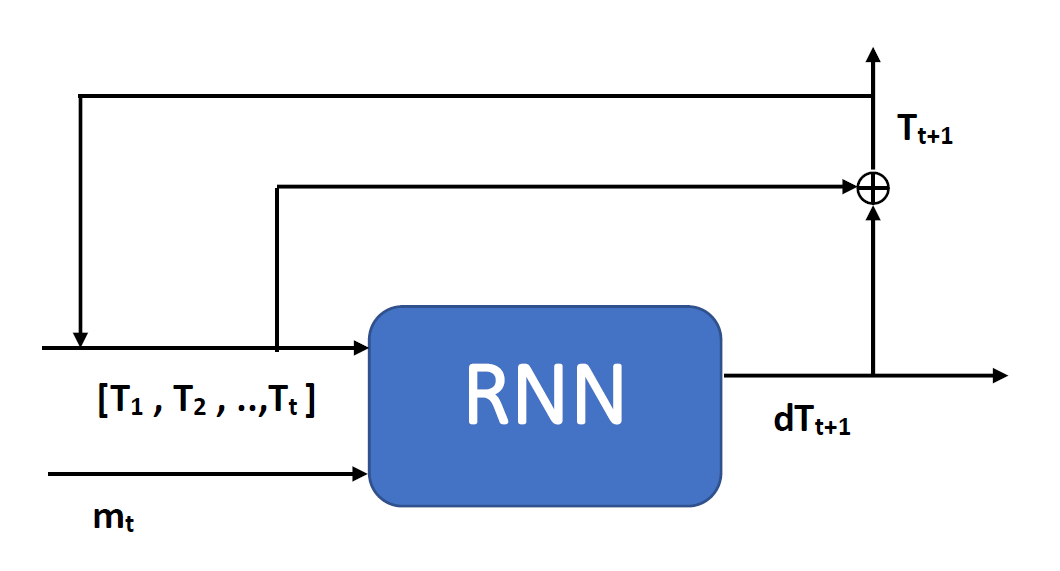

A well-functioning on-chip thermal management system requires knowledge of the current temperature and of potential temperature changes in the near future. This information is important for ensuring proactive thermal management on the chip. However, the limited number of on-chip sensors makes this task difficult. Hence, we propose a neural network based approach to predict the temperature map of the chip. We implemented two different neural networks: a feedforward network and a recurrent neural network. Our proposed method requires only performance counter measurements to predict the temperature map of the chip at runtime. Both models show promising results in estimating the on-chip temperature map, and the recurrent neural network outperformed the feedforward neural network. Furthermore, both networks were quantized and pruned, and the feedforward network was compiled into FPGA logic. The network could therefore be embedded in the chip itself, whether an ASIC or an FPGA.
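The abstract does not say which pruning or quantization schemes were used. As a generic illustration of the two ideas, the sketch below applies magnitude pruning (zeroing the smallest-magnitude weights) and symmetric per-tensor int8 quantization to a small, made-up weight vector. The sparsity level, bit width, and weights are assumptions, and the FPGA compilation step is not shown.

```python
def prune_by_magnitude(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Indices of the k weights with the smallest absolute value.
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w ~= scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    return [scale * v for v in q]


w = [0.8, -0.05, 0.3, -0.6, 0.01, 0.2]   # hypothetical layer weights
pruned = prune_by_magnitude(w, sparsity=0.5)
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)
```

Pruning reduces the number of multiply-accumulate units needed, and integer weights map to cheaper fixed-point logic, which is why both steps matter for on-chip deployment.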

A Method for Measuring the Perceived Location of Virtual Content in Optical See Through Augmented Reality

Abstract

For optical see-through augmented reality (AR), a new method for measuring the perceived three-dimensional location of a small virtual object is presented, where participants verbally report the virtual object's location relative to both a horizontal and a vertical grid. The method is tested with a Microsoft HoloLens AR display, and the experiments examine two different virtual object designs, whether turning in a circle between reported object locations disrupts HoloLens tracking, and whether accuracy errors found with a HoloLens display might be due to systematic errors restricted to that particular display. Turning in a circle did not disrupt HoloLens tracking, and testing with a second HoloLens did not suggest systematic errors restricted to a specific display. The proposed method could measure the perceived location of a virtual object to a precision of ~1 mm.

Assuring Learning-Enabled Components in Small Unmanned Aircraft Systems

Abstract

Aviation has a remarkable safety record ensured by strict processes, rules, certifications, and regulations, in which formal methods have played a role at large companies developing commercial aerospace vehicles and related cyber-physical systems (CPS). This has not been the case for small Unmanned Aircraft Systems (UAS), which are still largely unregulated, uncertified, and not fully integrated into the national airspace. However, emerging UAS missions interact closely with the environment and use learning-enabled components (LECs), such as neural networks (NNs), for many tasks. Applying formal methods in this context will improve safety and ease the integration of UAS into the national airspace.

We develop UAS that interact closely with the environment, interact with human users, and require precise planning, navigation, and control. They also generally leverage LECs for perception and data collection. However, the impact of ML-based LECs on UAS performance remains an open research question. To study the impact of ML-based perception on holistic UAS performance, we have developed an advanced simulator that incorporates ML-based perception in highly dynamic situations requiring advanced control strategies.

In other work, we have developed a WebGME-based software framework called the Assurance-based Learning-enabled CPS (ALC) toolchain, which includes the Neural Network Verification (NNV) formal verification tool, for designing CPS that incorporate LECs. In this paper, we present two key developments: 1) a quantification of the impact of ML-based perception on holistic (physical and cyber) UAS performance, and 2) a discussion of the challenges in applying these methods in this environment to guarantee UAS performance under various neural network (NN) strategies, executed at various computational rates and with vehicles moving at various speeds. We demonstrate that vehicle dynamics, the rate of perception execution, the design of the controller, and the design of the NN all contribute to overall vehicle performance.

Automatic Identification of Broiler Mortality using Image Processing Technology

Abstract

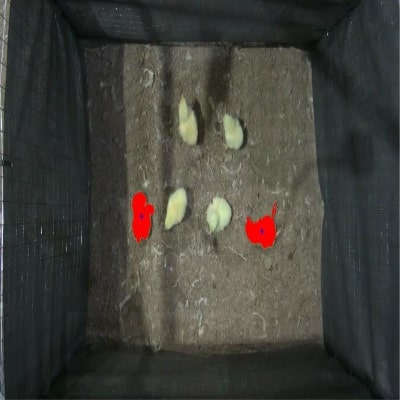

Identifying dead birds is a time- and labor-consuming task in commercial broiler production. Automatic mortality identification not only saves time and labor but also provides a critical component for autonomous mortality removal systems. The objectives of this study were to 1) investigate the accuracy of automatically identifying dead broilers at two stocking densities by processing thermal and visible images, and 2) characterize how body surface temperature drops over time after euthanasia. The tests were conducted weekly over two 9-week production cycles in a commercial broiler house. A 0.8 m × 0.6 m floor area was fenced with chicken wire to accommodate the experimental broilers. A dual-function camera installed above the fenced area simultaneously recorded thermal and visible videos of the broilers for 20 min at each stocking density. An algorithm was developed to extract the pixels of live broilers from the thermal images and the pixels of all (live and dead) broilers from the visible images. The algorithm then detected the pixels of dead birds by subtracting the processed thermal image from the processed visible image taken at the same time, and reported the coordinates of the dead broilers. The results show that the accuracy of mortality identification was 90.7% for the regular stocking density and 95.0% for the low stocking density for broilers 5 weeks old or younger. The accuracy decreased for older broilers because of smaller body-to-background temperature gradients and more body contact among birds. Body surface temperature dropped more slowly for older broilers than for younger ones: it took approximately 1.7 h for 1-week-old broilers to reach 1 °C above the background level, but over 6 h for 4-week-old and 7-week-old broilers.
In conclusion, the system and algorithm developed in this study successfully identified broiler mortality with promising accuracy for younger birds (less than 5 weeks old) but require improvement for older ones.
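The subtraction step described above can be sketched on toy data: pixels that look like a bird in the visible image but are not warm in the thermal image are flagged as dead-bird pixels, and their centroid gives a coordinate. This is a minimal sketch assuming already-registered images of the same size, birds brighter than the litter in the visible image, and simple global thresholds; the thresholds and pixel values are hypothetical, not the study's.

```python
Grid = list[list[float]]


def threshold(img: Grid, t: float) -> list[list[bool]]:
    return [[px > t for px in row] for row in img]


def dead_bird_pixels(thermal: Grid, visible: Grid,
                     thermal_t: float, visible_t: float) -> list[tuple[int, int]]:
    """All-bird pixels (visible) minus live-bird pixels (thermal)."""
    live = threshold(thermal, thermal_t)    # warm pixels -> live broilers
    birds = threshold(visible, visible_t)   # bright pixels -> all broilers
    return [(r, c)
            for r, row in enumerate(birds)
            for c, is_bird in enumerate(row)
            if is_bird and not live[r][c]]


def centroid(pixels: list[tuple[int, int]]) -> tuple[float, float]:
    n = len(pixels)
    return (sum(r for r, _ in pixels) / n, sum(c for _, c in pixels) / n)


# Toy 4x4 frames: a live bird (warm) top-left, a dead bird (cooled) bottom-right.
thermal = [[38, 38, 25, 25],
           [38, 38, 25, 25],
           [25, 25, 26, 26],
           [25, 25, 26, 26]]      # degrees C
visible = [[200, 200, 50, 50],
           [200, 200, 50, 50],
           [50, 50, 210, 210],
           [50, 50, 210, 210]]    # grey levels
dead = dead_bird_pixels(thermal, visible, thermal_t=30.0, visible_t=128.0)
print(centroid(dead))
```

With several dead birds in view, the flagged pixels would first be grouped into connected regions before reporting one coordinate per bird. The same sketch also hints at why older birds are harder: as the body-to-background temperature gradient shrinks, a single thermal threshold separates live from dead less cleanly.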

Towards Training an Agent in Augmented Reality World with Reinforcement Learning

Abstract

Reinforcement learning (RL) helps an agent learn an optimal path within a specific environment while maximizing its performance, and it plays a crucial role in training an agent to accomplish a specific task in an environment. To learn an optimal policy, however, a robot must undergo intensive training, which is not cost-effective in the real world. A cost-effective alternative is to train the agent in a virtual environment, so that the optimal policy it learns can be used in virtual as well as real environments to reach the goal state. In this paper, a new method is proposed to train a physical robot to avoid a mix of physical and virtual obstacles and reach a desired goal state, using an optimal policy obtained by training the robot in an augmented reality (AR) world with Q-learning, an active reinforcement learning technique.
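A minimal tabular Q-learning sketch of the setting described above: a grid world in which physical and virtual (AR-rendered) obstacles are both treated as blocked cells, trained with an epsilon-greedy policy and then followed greedily. The grid size, obstacle layout, rewards, and hyperparameters are hypothetical and are not taken from the paper.

```python
import random

ROWS, COLS = 4, 5
START, GOAL = (0, 0), (3, 4)
# Hypothetical layout; physical and virtual obstacles are both just blocked cells.
OBSTACLES = {(1, 1), (1, 2), (2, 3)}
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def step(state, action):
    r, c = state[0] + action[0], state[1] + action[1]
    if not (0 <= r < ROWS and 0 <= c < COLS) or (r, c) in OBSTACLES:
        return state, -1.0, False          # bump: stay in place, penalty
    if (r, c) == GOAL:
        return (r, c), 10.0, True
    return (r, c), -0.1, False             # small step cost favours short paths


def best_action(q, state):
    return max(range(len(ACTIONS)), key=lambda i: q.get((state, i), 0.0))


def train(episodes=500, alpha=0.5, gamma=0.95, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {}  # (state, action_index) -> value, defaults to 0
    for _ in range(episodes):
        state = START
        for _ in range(200):  # cap episode length
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))   # explore
            else:
                a = best_action(q, state)         # exploit
            nxt, reward, done = step(state, ACTIONS[a])
            best_next = 0.0 if done else max(
                q.get((nxt, i), 0.0) for i in range(len(ACTIONS)))
            # Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))
            old = q.get((state, a), 0.0)
            q[(state, a)] = old + alpha * (reward + gamma * best_next - old)
            state = nxt
            if done:
                break
    return q


def greedy_path(q, max_steps=50):
    state, path = START, [START]
    for _ in range(max_steps):
        state, _, done = step(state, ACTIONS[best_action(q, state)])
        path.append(state)
        if done:
            break
    return path


path = greedy_path(train())
print(path)
```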

A Collaborative Filtering Recommender System with Randomized Learning Rate and Regularized Parameter

Abstract

Collaborative filtering recommender systems that use machine learning algorithms can give well-optimized results, but selecting an appropriate learning rate and regularization parameter is not easy. The root mean square error (RMSE) varies from one set of these values to another, so the set that minimizes the RMSE has to be selected. In this paper, we propose a method to resolve this problem: our proposed system selects an appropriate learning rate and regularization parameter for the given data.
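To make the tuning problem concrete, the sketch below trains a small matrix-factorization model with stochastic gradient descent and picks the learning rate and regularization parameter by plain random search on validation RMSE. This is a generic random-search baseline assumed for illustration, not necessarily the authors' selection method; the sampling ranges, factor count, epochs, and toy ratings are all hypothetical.

```python
import math
import random


def predict(p_u, q_i):
    # Predicted rating is the dot product of user and item factor vectors.
    return sum(a * b for a, b in zip(p_u, q_i))


def train_mf(ratings, n_users, n_items, k, lr, reg, epochs, rng):
    """Matrix factorization trained with SGD and L2 regularization."""
    p = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_users)]
    q = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - predict(p[u], q[i])
            for f in range(k):
                pu, qi = p[u][f], q[i][f]
                p[u][f] += lr * (err * qi - reg * pu)
                q[i][f] += lr * (err * pu - reg * qi)
    return p, q


def rmse(ratings, p, q):
    se = 0.0
    for u, i, r in ratings:
        e = r - predict(p[u], q[i])
        se += e * e  # e * e, not e ** 2: float ** raises OverflowError on divergence
    return math.sqrt(se / len(ratings))


def random_search(train, valid, n_users, n_items, trials=20, seed=0):
    """Sample (learning rate, regularization) pairs log-uniformly and keep
    the pair with the lowest finite validation RMSE."""
    rng = random.Random(seed)
    best = None  # (rmse, lr, reg)
    for _ in range(trials):
        lr = 10 ** rng.uniform(-3, -1)
        reg = 10 ** rng.uniform(-3, 0)
        p, q = train_mf(train, n_users, n_items, k=2, lr=lr, reg=reg,
                        epochs=50, rng=rng)
        score = rmse(valid, p, q)
        if math.isfinite(score) and (best is None or score < best[0]):
            best = (score, lr, reg)
    return best


# Hypothetical (user, item, rating) triples.
train_set = [(0, 0, 5.0), (0, 1, 3.0), (1, 0, 4.0), (1, 2, 1.0),
             (2, 1, 2.0), (2, 2, 5.0), (3, 0, 4.0), (3, 2, 2.0)]
valid_set = [(0, 2, 1.0), (1, 1, 3.0), (2, 0, 4.0)]
best = random_search(train_set, valid_set, n_users=4, n_items=3)
print(best)
```

Sampling on a log scale spreads trials evenly across orders of magnitude, which suits parameters like these whose useful values span several decades.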

A Novel Method to Achieve Optimization in Facial Expression Recognition Using HMM

Abstract

Human-computer interaction is an emerging field of computer science in which computer vision, especially facial expression recognition, plays an essential role. Many approaches address this problem, and among them the hidden Markov model (HMM) is a notable one. This paper aims to optimize both the number of states used and the runtime complexity of the HMM. It also aims to enable parallel processing so that more than one image can be processed simultaneously.
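To show why the number of states drives HMM runtime, the sketch below implements the standard forward algorithm, whose cost is O(N²T) for N states and T observations, and classifies a sequence by choosing the expression model with the highest likelihood. The discrete observation symbols (standing in for quantized facial features), the two toy models, and their probabilities are hypothetical and are not the paper's models.

```python
def forward_likelihood(pi, A, B, obs):
    """P(obs | model) via the forward algorithm; O(N^2 * T) for N states."""
    n = len(pi)
    alpha = [pi[s] * B[s][obs[0]] for s in range(n)]
    for o in obs[1:]:
        # Each step sums over all N predecessor states for each of N states.
        alpha = [sum(alpha[j] * A[j][s] for j in range(n)) * B[s][o]
                 for s in range(n)]
    return sum(alpha)


def classify(models, obs):
    """Return the label of the HMM that best explains the observation sequence."""
    return max(models, key=lambda label: forward_likelihood(*models[label], obs))


# Hypothetical two-state models over two observation symbols {0, 1}.
A = [[0.5, 0.5], [0.0, 1.0]]
models = {
    "happy": ([1.0, 0.0], A, [[0.9, 0.1], [0.2, 0.8]]),
    "sad":   ([1.0, 0.0], A, [[0.1, 0.9], [0.8, 0.2]]),
}
label = classify(models, [0, 1])
print(label)
```

Halving the number of states roughly quarters the per-step work, and since each image's sequence is scored independently, sequences from different images can be evaluated in parallel.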